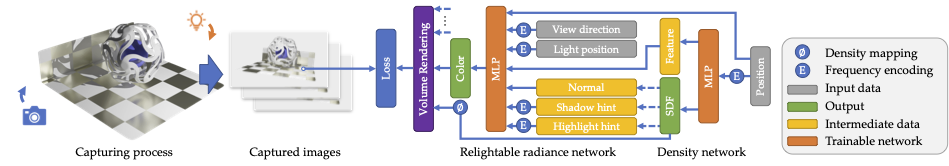

This paper presents a novel neural implicit radiance representation for free-viewpoint relighting from a small set of unstructured photographs of an object lit by a moving point light source that is not co-located with the view position. We express the shape as a signed distance function modeled by a multi-layer perceptron. In contrast to prior relightable implicit neural representations, we do not disentangle the different reflectance components, but model both the local and global reflectance at each point by a second multi-layer perceptron. In addition to density features, the current position, the normal (from the signed distance function), the view direction, and the light position, this second network also takes shadow and highlight hints to aid it in modeling the corresponding high-frequency light transport effects. These hints are provided as a suggestion, and we leave it up to the network to decide how to incorporate them in the final relit result. We demonstrate and validate our neural implicit representation on synthetic and real scenes exhibiting a wide variety of shapes, material properties, and global-illumination light transport.
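To make the data flow described above concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a toy analytic sphere in place of the learned shape network, random untrained weights for the second network, a single scalar shadow hint, and four highlight hint channels; the names `sphere_sdf`, `sdf_normal`, `radiance_inputs`, and `tiny_mlp`, and all dimensions, are hypothetical choices made for illustration.

```python
import numpy as np

def sphere_sdf(p, radius=1.0):
    """Toy stand-in for the shape MLP: signed distance to a sphere at the origin."""
    return np.linalg.norm(p, axis=-1) - radius

def sdf_normal(sdf, p, eps=1e-4):
    """Unit normal as the normalized central-difference gradient of the SDF."""
    offsets = np.eye(3) * eps
    grad = np.stack(
        [(sdf(p + o) - sdf(p - o)) / (2.0 * eps) for o in offsets], axis=-1
    )
    return grad / np.linalg.norm(grad, axis=-1, keepdims=True)

def radiance_inputs(features, p, normal, view_dir, light_pos, shadow, highlights):
    """Concatenate the per-point inputs that the abstract lists for the second MLP."""
    return np.concatenate(
        [features, p, normal, view_dir, light_pos, shadow, highlights], axis=-1
    )

def tiny_mlp(x, layers):
    """Plain ReLU MLP; the final layer is linear."""
    for w, b in layers[:-1]:
        x = np.maximum(x @ w + b, 0.0)
    w, b = layers[-1]
    return x @ w + b

rng = np.random.default_rng(0)
feat_dim, n_highlight, hidden = 8, 4, 16   # hypothetical sizes

# Four sample points in space (fixed so the example is deterministic).
p = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.5, 0.5, 0.5], [-1.0, 1.0, 2.0]])
n = p.shape[0]

normal = sdf_normal(sphere_sdf, p)
features = rng.normal(size=(n, feat_dim))          # placeholder density features
view_dir = np.tile([0.0, 0.0, 1.0], (n, 1))        # placeholder view direction
light_pos = np.tile([2.0, 2.0, 2.0], (n, 1))       # placeholder point light position
shadow = rng.uniform(size=(n, 1))                  # placeholder shadow hint
highlights = rng.uniform(size=(n, n_highlight))    # placeholder highlight hints

x = radiance_inputs(features, p, normal, view_dir, light_pos, shadow, highlights)

# Random, untrained weights: the point is the interface, not the output values.
in_dim = x.shape[-1]
layers = [
    (rng.normal(size=(in_dim, hidden)) * 0.1, np.zeros(hidden)),
    (rng.normal(size=(hidden, 3)) * 0.1, np.zeros(3)),
]
rgb = tiny_mlp(x, layers)   # one RGB radiance value per point
```

The hints enter as ordinary extra input channels, which matches the idea that they are suggestions: nothing in the sketch forces the network to multiply by the shadow hint or add the highlight hints, so a trained network is free to weight them as it sees fit.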

Complex Ball

Basket

Fur Ball

Metallic Cup & Plane

Anisotropic Metal

Translucent

Drums

Hotdog

Lego

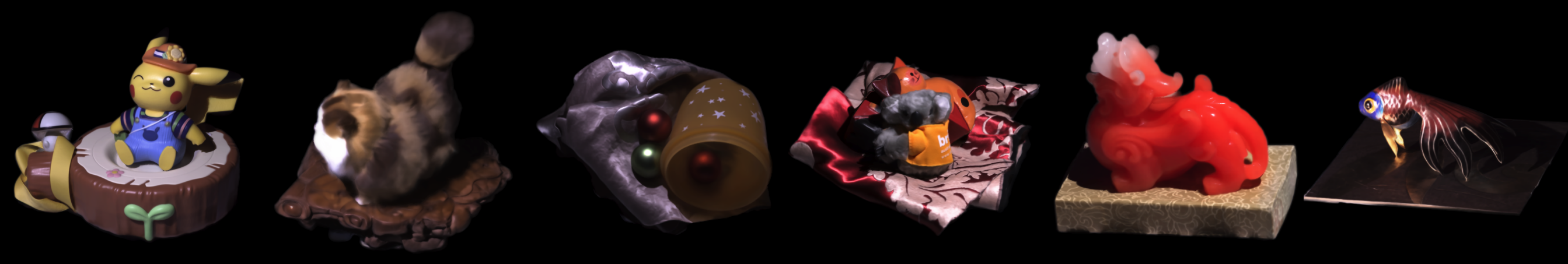

Cat on Decor

Cup & Fabric

Pikachu Statuette

Ornamental Fish

Cluttered Scene

Pixiu Statuette

@inproceedings{zeng2023nrhints,
  title = {Relighting Neural Radiance Fields with Shadow and Highlight Hints},
  author = {Chong Zeng and Guojun Chen and Yue Dong and Pieter Peers and Hongzhi Wu and Xin Tong},
  booktitle = {ACM SIGGRAPH 2023 Conference Proceedings},
  year = {2023}
}